Did you know that more than 40,000 people die in U.S. car accidents each year, and that driver error is commonly cited as a factor in about 90% of crashes? These numbers highlight the urgent need for new ways to travel, like self-driving cars. These vehicles aim to cut down on accidents by reducing human error. Yet most car makers have only reached Level 2 autonomy.

Big names like Tesla and Ford are leading the way, but we’re not there yet. In this article, we’ll look at how far we’ve come, what’s holding us back, and what the future holds for self-driving cars. We’ll also talk about the need to balance new tech with safety.

Key Takeaways

- Most automakers are at Level 2 autonomy, where the car handles steering and speed but the driver must stay attentive.

- Accidents involving self-driving features raise big safety worries.

- Building public trust is key for self-driving cars to become part of our lives.

- Brands like Tesla have set the bar high with lots of real-world testing.

- Getting to Level 3 autonomy and beyond is seen as an important step toward cutting down on accidents.

- Rules for self-driving cars need to be updated fast to boost safety and innovation.

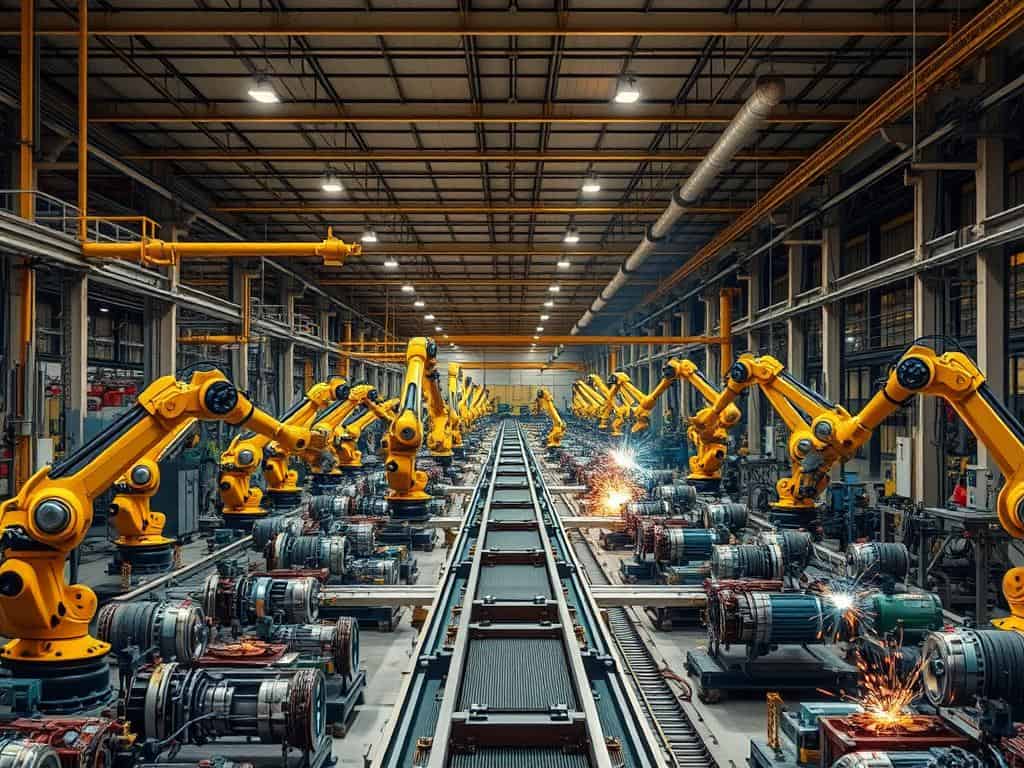

The Current State of Self-Driving Cars

The world of self-driving cars is changing fast. New tech and public views are shaping it. It’s key to understand what self-driving cars are and the different levels of automation out there.

Understanding Autonomous Vehicles

Autonomous vehicles, or AVs, use advanced tech to drive on their own. They rely on sensors like radar, cameras, and LiDAR to see what’s around them. This tech helps them stay in their lane and avoid crashes, but the cars most people can buy today still need a driver ready to take over.

Levels of Autonomy in Self-Driving Cars

The Society of Automotive Engineers (SAE) defines six levels of driving automation, numbered 0 to 5. Level 0 means no automation, and Level 5 means full autonomy. Today, most systems sold in consumer cars, like Tesla’s Full Self-Driving and Ford’s BlueCruise, are Level 2.
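
To make the scale concrete, here is a minimal Python sketch of the six levels. The level names follow the SAE J3016 standard rather than this article, and the `SAELevel` class and `driver_must_supervise` helper are hypothetical names made up for illustration. The helper assumes, as J3016 describes, that a human has to watch the road constantly at Levels 0 through 2.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving automation levels, from 0 (none) to 5 (full)."""

    NO_AUTOMATION = 0           # the human does all the driving
    DRIVER_ASSISTANCE = 1       # steering OR speed support
    PARTIAL_AUTOMATION = 2      # steering AND speed support
    CONDITIONAL_AUTOMATION = 3  # car drives in limited conditions, human takes over on request
    HIGH_AUTOMATION = 4         # car drives itself within a limited operating area
    FULL_AUTOMATION = 5         # car drives itself everywhere


def driver_must_supervise(level: SAELevel) -> bool:
    """Return True when a human must monitor the road at all times.

    Assumption for this sketch: constant supervision applies to Levels 0-2.
    """
    return level <= SAELevel.PARTIAL_AUTOMATION
```

For example, `driver_must_supervise(SAELevel.PARTIAL_AUTOMATION)` returns `True`, which is why Level 2 systems still count as driver assistance rather than true self-driving.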

Real-World Testing and Challenges

Companies are testing self-driving cars on real roads, but they face big challenges. City tests have exposed many problems, from safety worries to a patchwork of rules to follow. Crashes involving self-driving cars have made people more cautious. The NHTSA now requires companies to report crashes involving their systems.

Challenges Facing Self-Driving Cars

The journey to make self-driving cars common faces many hurdles. One big challenge is the technology itself. Cars can’t always handle sudden surprises on the road, which makes safe driving tricky.

Technological Limitations

The tech issues with self-driving cars come up again and again. These cars need to quickly understand and react to a huge range of driving situations. Even with new tech, they still struggle in real-world tests. To overcome these hurdles, we need better algorithms and sensors.

Safety Concerns and Incidents

Safety is a constant topic with self-driving cars. Accidents in places like California and Arizona have raised big questions. These incidents make us think about who’s to blame and the ethics of using these cars.

Statistics show the risks are real. For example, over one period of about 11 months, almost 400 crashes involving vehicles with driver-assistance systems were reported.

Public Trust and Acceptance

Getting people to trust self-driving cars is key. Being open about how AI works can help build trust. When people understand the tech, they feel safer using it.

Trust also shapes how well people and cars work together, and whether society accepts these cars at all.

Conclusion

Looking at the future of self-driving cars, we see both promise and challenges. The tech behind them is getting better, but safety and trust are big hurdles. Surveys suggest that about 70% of Americans are worried about fully self-driving cars.

Research and testing have revealed some problems, like crashes and hacking risks. But self-driving cars could also help a lot. They could cut down traffic in big cities and give older adults and people with disabilities more ways to get around.

To make self-driving cars work, we need strong rules and better safety standards. This will help solve the big problems and make self-driving cars safe for everyone. It’s a step towards a future where they’re part of our daily lives.